Link : https://arxiv.org/pdf/2406.04692

Notes

- Goal: harness the collective expertise and strengths of multiple LLMs through a Mixture-of-Agents (MoA) methodology.

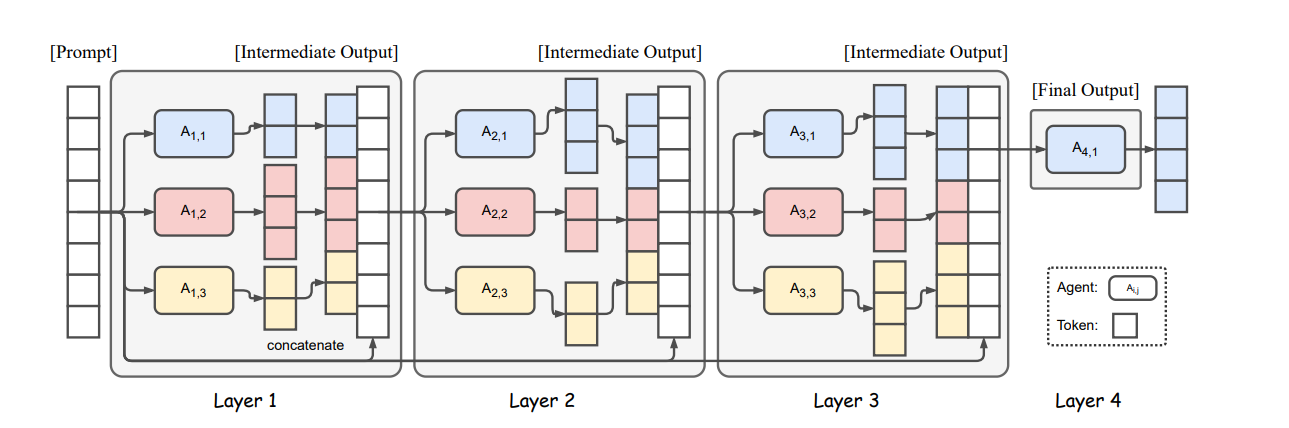

- Layered MoA architecture in which each layer comprises multiple LLM agents.

- Each agent takes all the outputs from agents in the previous layer as auxiliary information in generating its response.

Reason

- Different LLMs possess unique strengths and specialize in different aspects of tasks.

Finding

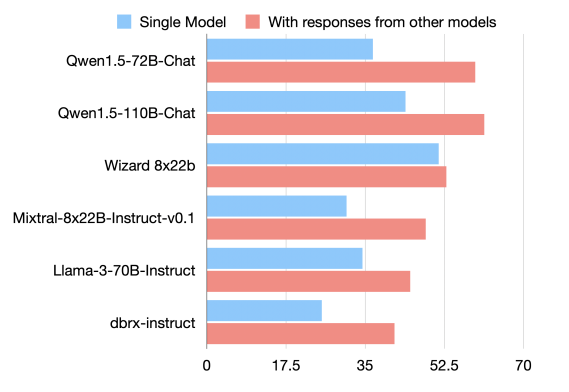

- Collaborativeness of LLMs: an LLM tends to generate better responses when presented with outputs from other models, even if those other models are less capable on their own.

- AlpacaEval 2.0 LC win rates improve when provided with responses from other models

Architecture

- Initially, LLMs in the first layer generate responses to a given prompt.

- These responses are then presented to agents in the next layer (which may reuse a model from the first layer) for further refinement.

- This iterative refinement process continues for several cycles until obtaining a more robust and comprehensive response.
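
The layer-by-layer flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: `Agent`, `build_layer_input`, `run_moa`, and `make_stub` are hypothetical names, the agents are stubs rather than real model calls, and the sketch assumes the last layer holds a single aggregator whose response is the final answer.

```python
from typing import Callable, List

# Hypothetical type: an agent is any function mapping a prompt string to a response string.
Agent = Callable[[str], str]


def build_layer_input(user_prompt: str, previous: List[str]) -> str:
    """Attach the previous layer's responses (if any) to the original prompt."""
    if not previous:
        return user_prompt  # the first layer sees only the user prompt
    numbered = "\n".join(f"{k}. {resp}" for k, resp in enumerate(previous, start=1))
    return f"Responses from the previous layer:\n{numbered}\n\nUser query:\n{user_prompt}"


def run_moa(user_prompt: str, layers: List[List[Agent]]) -> str:
    """Run the prompt through each layer in turn and return the last agent's response."""
    if not layers or any(len(agents) == 0 for agents in layers):
        raise ValueError("every layer needs at least one agent")
    if len(layers[-1]) != 1:
        raise ValueError("this sketch assumes a single aggregator in the last layer")
    responses: List[str] = []
    for agents in layers:
        layer_input = build_layer_input(user_prompt, responses)
        # Every agent in the layer sees all outputs of the previous layer.
        responses = [agent(layer_input) for agent in agents]
    return responses[0]


def make_stub(name: str) -> Agent:
    """Stand-in for a real model call; it only reports how much text it received."""
    return lambda text: f"{name} read {len(text)} characters"


if __name__ == "__main__":
    # The same stub ("a") appears in two layers, mirroring model reuse across layers.
    layers = [[make_stub("a"), make_stub("b")], [make_stub("a"), make_stub("c")], [make_stub("d")]]
    print(run_moa("Explain MoA in one sentence.", layers))
```

Passing agents as plain callables keeps the loop independent of any particular model provider; a real setup would replace `make_stub` with functions that call actual models.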

Selection Process of LLM for layer

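- Performance Metrics : The average win rate of models in layer i plays a significant role in determining their suitability for inclusion in layer i + 1.

- Diversity Considerations : The diversity of model outputs is also crucial. Responses generated by heterogeneous models contribute significantly more than those produced by the same model.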
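
One way to combine the two criteria is a greedy pick that ranks candidates by average win rate and prefers models from families not yet chosen. This is only an illustrative heuristic, not a procedure given in the notes or attributed to the paper: `select_models`, the `family` label used as a diversity proxy, and the model names and win rates in the usage lines are all hypothetical placeholders.

```python
from typing import List, Tuple

# Hypothetical candidate record: (model_name, family, average_win_rate).
Candidate = Tuple[str, str, float]


def select_models(candidates: List[Candidate], k: int) -> List[str]:
    """Pick up to k models: best win rate first, one per family before any repeats."""
    ranked = sorted(candidates, key=lambda c: c[2], reverse=True)
    chosen: List[str] = []
    seen_families = set()
    # First pass: favor diversity by taking the best model of each unseen family.
    for name, family, _ in ranked:
        if len(chosen) == k:
            break
        if family not in seen_families:
            chosen.append(name)
            seen_families.add(family)
    # Second pass: fill any remaining slots purely by win rate.
    for name, _, _ in ranked:
        if len(chosen) == k:
            break
        if name not in chosen:
            chosen.append(name)
    return chosen


if __name__ == "__main__":
    # Placeholder values for illustration only, not measured results.
    pool = [("model-a", "family-1", 0.6), ("model-b", "family-1", 0.5), ("model-c", "family-2", 0.4)]
    print(select_models(pool, 2))
```

The two-pass structure makes the trade-off explicit: diversity is satisfied first, and raw win rate only decides the leftover slots.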

Contributions of paper

- Novel framework : Mixture-of-Agents framework

- Finding of collaborativeness of language models : highlights the inherent collaborativeness among LLMs

- State-of-the-art LLM performance : extensive experiments on benchmarks such as AlpacaEval 2.0, MT-Bench, and FLASK

Math

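$$y_i = \bigoplus_{j=1}^{n} \left[ A_{i,j}(x_i) \right] + x_1, \qquad x_{i+1} = y_i$$

- $x_1$ = given input prompt

- $y_i$ = output of the $i$-th MoA layer

- $A_{i,j}$ = $j$-th LLM at layer $i$

- $+$ = concatenation of texts

- $\bigoplus$ = application of the Aggregate-and-Synthesize prompt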

Aggregate-and-Synthesize Prompt

You have been provided with a set of responses from various open-source models to the latest user query. Your task is to synthesize these responses into a single, high-quality response. It is crucial to critically evaluate the information provided in these responses, recognizing that some of it may be biased or incorrect. Your response should not simply replicate the given answers but should offer a refined, accurate, and comprehensive reply to the instruction. Ensure your response is well-structured, coherent, and adheres to the highest standards of accuracy and reliability. Responses from models:

1. [Model Response from $A_{i,1}$]

2. [Model Response from $A_{i,2}$]

...

n. [Model Response from $A_{i,n}$]
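
Filling in the template is a matter of numbering the collected responses and appending them to the instruction text. The sketch below assumes the responses are already available as strings; `build_aggregator_prompt` is a hypothetical helper name, and how the resulting text is handed to a model (for example as a system message) is left open.

```python
from typing import List

# Instruction text copied from the Aggregate-and-Synthesize prompt quoted above.
AGGREGATE_AND_SYNTHESIZE = (
    "You have been provided with a set of responses from various open-source models "
    "to the latest user query. Your task is to synthesize these responses into a single, "
    "high-quality response. It is crucial to critically evaluate the information provided "
    "in these responses, recognizing that some of it may be biased or incorrect. Your "
    "response should not simply replicate the given answers but should offer a refined, "
    "accurate, and comprehensive reply to the instruction. Ensure your response is "
    "well-structured, coherent, and adheres to the highest standards of accuracy and "
    "reliability. Responses from models:"
)


def build_aggregator_prompt(responses: List[str]) -> str:
    """Append the responses as a numbered list (1., 2., ...) after the instruction text."""
    if not responses:
        raise ValueError("need at least one response to aggregate")
    numbered = "\n".join(f"{k}. {resp}" for k, resp in enumerate(responses, start=1))
    return f"{AGGREGATE_AND_SYNTHESIZE}\n{numbered}"


if __name__ == "__main__":
    print(build_aggregator_prompt(["First draft answer.", "Second draft answer."]))
```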

Results

AlpacaEval 2.0 LC win rates improve when provided with responses from other models.